Introduction to Linear Algebra

Linear algebra is a fundamental branch of mathematics that deals with linear equations and their representations in vector spaces. At its core, linear algebra is about solving systems of linear equations, which have numerous applications in science, engineering, and technology. This article delves into the key concepts of linear algebra, focusing on the methods used to solve systems of linear equations.

The Fundamental Problem of Linear Algebra

The central problem in linear algebra is solving a system of linear equations. Typically, we deal with n equations and n unknowns, which is considered the "normal" case. To approach this problem, we can use three different perspectives:

- The row picture

- The column picture

- The matrix form

Let's explore each of these perspectives in detail.

The Row Picture

The row picture involves looking at each equation in the system individually. This approach is particularly intuitive for systems with two equations and two unknowns, as it can be easily visualized in a two-dimensional plane.

Example: 2x2 System

Consider the following system of equations:

- 2x - y = 0

- -x + 2y = 3

In the row picture, we plot each equation as a line in the xy-plane:

- For the first equation (2x - y = 0), we can identify two points:

  - (0, 0) satisfies the equation

  - (1, 2) also satisfies the equation

- For the second equation (-x + 2y = 3), we can identify two points:

  - (-3, 0) satisfies the equation

  - (-1, 1) also satisfies the equation

When we plot these lines, their intersection point represents the solution to the system. In this case, the lines intersect at the point (1, 2), which is the solution to both equations.
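The intersection can also be computed rather than read off a plot. The sketch below is one possible way to do it, using the closed-form 2x2 formula (Cramer's rule) with exact fractions; the function name `intersect_lines` and its argument order are illustrative choices, not something taken from the lecture.

```python
from fractions import Fraction

def intersect_lines(a1, b1, c1, a2, b2, c2):
    """Intersection of a1*x + b1*y = c1 and a2*x + b2*y = c2.

    Returns None when the lines are parallel or identical,
    because then there is no single intersection point.
    """
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    x = Fraction(c1 * b2 - c2 * b1, det)
    y = Fraction(a1 * c2 - a2 * c1, det)
    return x, y

# 2x - y = 0 and -x + 2y = 3 from the example above
point = intersect_lines(2, -1, 0, -1, 2, 3)
print(f"x = {point[0]}, y = {point[1]}")  # x = 1, y = 2
```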

Limitations of the Row Picture

While the row picture is intuitive for 2x2 systems, it becomes increasingly difficult to visualize as we add more equations and unknowns. For a 3x3 system, we would need to visualize the intersection of three planes in three-dimensional space, which is challenging for most people to picture mentally.

The Column Picture

The column picture offers an alternative perspective that becomes increasingly valuable as we deal with larger systems. In this approach, we focus on the columns of the coefficient matrix rather than individual equations.

Understanding the Column Picture

In the column picture, we view the system of equations as a linear combination of vectors. Each column of the coefficient matrix is a vector, and we look for the combination of these vectors that produces the right-hand-side vector.

For our 2x2 example:

$$
\begin{bmatrix} 2 & -1 \\ -1 & 2 \end{bmatrix}
\begin{bmatrix} x \\ y \end{bmatrix}
=
\begin{bmatrix} 0 \\ 3 \end{bmatrix}
$$

We can rewrite this as:

$$
x \begin{bmatrix} 2 \\ -1 \end{bmatrix}
+ y \begin{bmatrix} -1 \\ 2 \end{bmatrix}
=
\begin{bmatrix} 0 \\ 3 \end{bmatrix}
$$

This representation shows that we're looking for a linear combination of the column vectors [2, -1] and [-1, 2] that equals the vector [0, 3].

Visualizing the Column Picture

To visualize this, we can plot the column vectors in the plane:

- The first column vector is (2, -1)

- The second column vector is (-1, 2)

The solution (x, y) represents the coefficients needed to combine these vectors to reach the point (0, 3).
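As a quick check, we can substitute the solution found in the row picture, x = 1 and y = 2, into the combination of columns and confirm that it lands on (0, 3):

$$
1 \begin{bmatrix} 2 \\ -1 \end{bmatrix}
+ 2 \begin{bmatrix} -1 \\ 2 \end{bmatrix}
=
\begin{bmatrix} 2 - 2 \\ -1 + 4 \end{bmatrix}
=
\begin{bmatrix} 0 \\ 3 \end{bmatrix}
$$

Geometrically, this means taking one copy of the first column and adding two copies of the second.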

Advantages of the Column Picture

The column picture becomes particularly powerful when dealing with higher-dimensional systems. It allows us to think about linear combinations of vectors in n-dimensional space, which is a more abstract but often more useful way of understanding the problem.

The Matrix Form

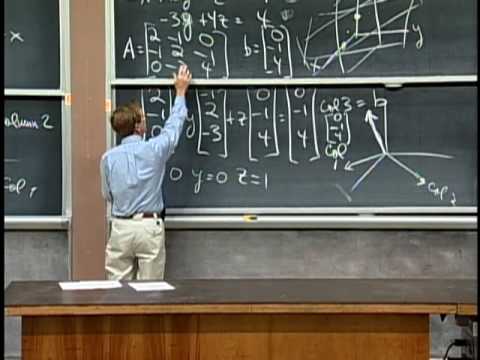

The matrix form provides a compact way to represent and manipulate systems of linear equations. It combines the insights from both the row and column pictures into a single, powerful notation.

Matrix Equation

For our 2x2 example, the matrix form is:

$$
\begin{bmatrix} 2 & -1 \\ -1 & 2 \end{bmatrix}
\begin{bmatrix} x \\ y \end{bmatrix}
=
\begin{bmatrix} 0 \\ 3 \end{bmatrix}
$$

We can write this more compactly as:

Ax = b

Where:

- A is the coefficient matrix

- x is the vector of unknowns

- b is the vector of constants on the right-hand side

Matrix Multiplication

Understanding matrix multiplication is crucial for working with the matrix form. There are two ways to think about matrix-vector multiplication:

- Row-wise: Each element of the resulting vector is the dot product of a row of A with x.

- Column-wise: The result is a linear combination of the columns of A, with coefficients from x.

The column-wise interpretation aligns closely with the column picture, reinforcing the idea of linear combinations.
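The two interpretations can be written as two small functions that give the same result. This is a minimal sketch in plain Python; the names `matvec_by_rows` and `matvec_by_columns` are made up for illustration, and matrices are assumed to be stored as lists of rows.

```python
def matvec_by_rows(A, x):
    """Each output entry is the dot product of one row of A with x."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]

def matvec_by_columns(A, x):
    """The output is a linear combination of the columns of A, weighted by x."""
    n_rows = len(A)
    result = [0] * n_rows
    for j, x_j in enumerate(x):
        for i in range(n_rows):
            result[i] += x_j * A[i][j]  # add x_j times column j
    return result

A = [[2, -1], [-1, 2]]
x = [1, 2]
print(matvec_by_rows(A, x))     # [0, 3]
print(matvec_by_columns(A, x))  # [0, 3]
```

Both functions perform the same multiplications; they only differ in the order in which the products are grouped.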

Solving Systems of Linear Equations

With these perspectives in mind, we can approach solving systems of linear equations more systematically.

Methods for Solving

- Graphical Method: For 2x2 systems, we can plot the lines and find their intersection point. This corresponds to the row picture.

- Substitution Method: We solve for one variable in terms of others and substitute it into the remaining equations.

- Elimination Method: We systematically eliminate variables to transform the system into an equivalent, easier-to-solve form. This method, also known as Gaussian elimination, is particularly powerful for larger systems.

- Matrix Methods: We can use matrix operations to solve the system, including methods like inverse matrices and Cramer's rule.
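To make the elimination method concrete, here is a minimal sketch of Gaussian elimination followed by back substitution. It assumes a square system with a unique solution and uses exact fractions to avoid rounding; the function name `gaussian_elimination` and the list-of-rows layout are illustrative choices.

```python
from fractions import Fraction

def gaussian_elimination(A, b):
    """Solve Ax = b for a square, nonsingular A using elimination with row swaps."""
    n = len(A)
    # Build the augmented matrix [A | b] with exact rational arithmetic.
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]

    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular; no unique solution")
        M[col], M[pivot] = M[pivot], M[col]
        # Subtract multiples of the pivot row to zero out the entries below it.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            M[r] = [m_r - factor * m_p for m_r, m_p in zip(M[r], M[col])]

    # Back substitution on the resulting upper-triangular system.
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        known = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - known) / M[i][i]
    return x

solution = gaussian_elimination([[2, -1], [-1, 2]], [0, 3])
print([str(v) for v in solution])  # ['1', '2']
```

For floating-point input, a production routine would normally choose the largest available pivot in each column rather than the first nonzero one.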

Existence and Uniqueness of Solutions

When solving systems of linear equations, we may encounter three possible scenarios:

- Unique Solution: The system has exactly one solution. This occurs when the number of independent equations equals the number of unknowns, and the equations are consistent.

- No Solution: The system is inconsistent, meaning there's no set of values that satisfies all equations simultaneously.

- Infinite Solutions: The system has infinitely many solutions. This happens when the equations are consistent but there are fewer independent equations than unknowns.
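One way to tell these three cases apart is to compare the rank of the coefficient matrix A with the rank of the augmented matrix [A | b]. The sketch below assumes that approach; `rank` and `classify_system` are hypothetical helper names, and exact fractions are used so that zero tests are reliable.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix, computed by row reduction with exact fractions."""
    M = [[Fraction(v) for v in row] for row in rows]
    n_cols = len(M[0]) if M else 0
    rank_count = 0
    for col in range(n_cols):
        # Look for a pivot in this column among the rows not yet used.
        pivot = next((r for r in range(rank_count, len(M)) if M[r][col] != 0), None)
        if pivot is None:
            continue
        M[rank_count], M[pivot] = M[pivot], M[rank_count]
        for r in range(rank_count + 1, len(M)):
            factor = M[r][col] / M[rank_count][col]
            M[r] = [a - factor * p for a, p in zip(M[r], M[rank_count])]
        rank_count += 1
    return rank_count

def classify_system(A, b):
    """Return which of the three scenarios applies to Ax = b."""
    augmented = [list(row) + [rhs] for row, rhs in zip(A, b)]
    rank_A, rank_aug = rank(A), rank(augmented)
    if rank_A < rank_aug:
        return "no solution"
    if rank_A == len(A[0]):  # as many independent equations as unknowns
        return "unique solution"
    return "infinitely many solutions"

print(classify_system([[2, -1], [-1, 2]], [0, 3]))  # unique solution
print(classify_system([[1, 2], [2, 4]], [1, 3]))    # no solution
print(classify_system([[1, 2], [2, 4]], [1, 2]))    # infinitely many solutions
```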

Linear Independence and Span

Two crucial concepts in linear algebra that relate to solving systems of equations are linear independence and span.

Linear Independence

A set of vectors is linearly independent if none of the vectors can be expressed as a linear combination of the others. In the context of solving equations, if the columns of a square matrix A are linearly independent, then Ax = b has exactly one solution for every b; equivalently, each equation contributes information that the others do not.
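For two vectors in the plane, independence can be tested with the determinant of the 2x2 matrix whose columns are those vectors: the columns are independent exactly when the determinant is nonzero. The helper below is a small illustration of that test, with a made-up name, and it only handles the 2x2 case.

```python
def columns_are_independent_2x2(A):
    """True when the two columns of a 2x2 matrix are linearly independent."""
    (a, b), (c, d) = A
    return a * d - b * c != 0

print(columns_are_independent_2x2([[2, -1], [-1, 2]]))  # True
print(columns_are_independent_2x2([[1, 2], [2, 4]]))    # False: second column is twice the first
```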

Span

The span of a set of vectors is the set of all possible linear combinations of those vectors. When solving Ax = b, we're essentially asking whether b is in the span of the columns of A.
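A small example, chosen here purely for illustration, shows what happens when the columns do not span the whole plane. Take columns (1, 2) and (2, 4):

$$
x \begin{bmatrix} 1 \\ 2 \end{bmatrix}
+ y \begin{bmatrix} 2 \\ 4 \end{bmatrix}
=
(x + 2y) \begin{bmatrix} 1 \\ 2 \end{bmatrix}
$$

Every combination is a multiple of (1, 2), so the span is a single line. A right-hand side such as b = (1, 3) is not on that line and the system has no solution, while b = (1, 2) is on the line and the system has infinitely many solutions.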

Applications of Linear Algebra

Understanding how to solve systems of linear equations is crucial for many real-world applications:

- Computer Graphics: Transformations and projections in 3D graphics are often represented as systems of linear equations.

- Economics: Input-output models and equilibrium analysis often involve solving large systems of linear equations.

- Engineering: Structural analysis, circuit analysis, and control systems frequently require solving linear systems.

- Machine Learning: Many algorithms rely on linear algebra: linear regression leads to a system of linear equations, and principal component analysis is built on eigenvalues and eigenvectors.

- Physics: Quantum mechanics and relativity theory make extensive use of linear algebra concepts.

Advanced Topics in Linear Algebra

As we delve deeper into linear algebra, several advanced topics build upon the foundation of solving systems of linear equations:

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are special scalars and vectors associated with square matrices. They have important applications in physics, engineering, and data science, particularly in areas like vibration analysis, quantum mechanics, and principal component analysis.
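As a brief worked illustration (not part of the original discussion), the eigenvalues of the coefficient matrix from our 2x2 example are the roots of its characteristic polynomial:

$$
\det(A - \lambda I)
= \det \begin{bmatrix} 2 - \lambda & -1 \\ -1 & 2 - \lambda \end{bmatrix}
= (2 - \lambda)^2 - 1
= (\lambda - 1)(\lambda - 3)
$$

So the eigenvalues are 1 and 3. The vector (1, 1) is an eigenvector for the eigenvalue 1, since A(1, 1) = (1, 1), and (1, -1) is an eigenvector for the eigenvalue 3, since A(1, -1) = (3, -3).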

Vector Spaces and Subspaces

The concept of vector spaces generalizes the ideas we've discussed to more abstract settings. Subspaces, which are vector spaces contained within larger vector spaces, are particularly important for understanding the structure of solutions to systems of linear equations.

Linear Transformations

Linear transformations are functions between vector spaces that preserve vector addition and scalar multiplication. They provide a powerful framework for understanding many operations in linear algebra, including matrix multiplication.

Orthogonality and Projections

Orthogonality extends the idea of perpendicularity to higher dimensions. Projections, which involve finding the closest point in a subspace to a given vector, have numerous applications, including in least squares fitting and signal processing.
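The simplest projection is onto a line through the origin in the direction of a vector a, where the closest point to b is (a·b / a·a) a. The sketch below illustrates that formula; the function names and the sample vectors are chosen here for demonstration only.

```python
def dot(u, v):
    """Dot product of two vectors of equal length."""
    return sum(u_i * v_i for u_i, v_i in zip(u, v))

def project_onto_line(b, a):
    """Closest point to b on the line through the origin in direction a."""
    scale = dot(a, b) / dot(a, a)
    return [scale * a_i for a_i in a]

b = [0.0, 3.0]
a = [2.0, -1.0]
p = project_onto_line(b, a)
error = [b_i - p_i for b_i, p_i in zip(b, p)]

print(p)              # the projection of b onto the line through a
print(dot(error, a))  # close to zero: the error is perpendicular to a
```

The second printed value shows the defining property of a projection: what is left over after projecting is orthogonal to the line.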

Computational Aspects of Linear Algebra

As systems of linear equations become larger, computational efficiency becomes increasingly important.

Numerical Methods

For large systems, direct methods like Gaussian elimination can become computationally expensive, since the work for a dense n-by-n system grows roughly as n^3. Iterative methods, such as the Jacobi method or the Gauss-Seidel method, can be more efficient for certain types of large, sparse systems.
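As an illustration of the iterative idea, here is a minimal sketch of the Jacobi method in plain Python. It assumes nonzero diagonal entries and a matrix for which the iteration converges (for example, a strictly diagonally dominant one, as in our 2x2 example); the fixed iteration count is an arbitrary choice for the demonstration.

```python
def jacobi(A, b, iterations=50):
    """Approximate the solution of Ax = b with a fixed number of Jacobi sweeps."""
    n = len(A)
    x = [0.0] * n  # starting guess
    for _ in range(iterations):
        # Solve equation i for x[i], using the previous values of the other unknowns.
        x = [
            (b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
            for i in range(n)
        ]
    return x

approx = jacobi([[2, -1], [-1, 2]], [0, 3])
print(approx)  # approaches the exact solution (1, 2)
```

A practical implementation would stop when successive iterates differ by less than a tolerance instead of running a fixed number of sweeps.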

Stability and Conditioning

Numerical stability is crucial when solving systems on computers with finite precision arithmetic. The condition number of a matrix provides a measure of how sensitive the solution of a linear system is to small changes in the input.
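To give a feel for the condition number, the sketch below computes it for 2x2 matrices as the product of the infinity norms of A and its inverse. This is one common definition among several, and the helper names are hypothetical; libraries typically offer more general routines.

```python
def inverse_2x2(A):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def inf_norm(A):
    """Infinity norm: the largest absolute row sum."""
    return max(sum(abs(v) for v in row) for row in A)

def condition_number_inf(A):
    """Condition number of a 2x2 matrix in the infinity norm."""
    return inf_norm(A) * inf_norm(inverse_2x2(A))

well_conditioned = condition_number_inf([[2, -1], [-1, 2]])
ill_conditioned = condition_number_inf([[1, 1], [1, 1.0001]])
print(well_conditioned)  # about 3
print(ill_conditioned)   # tens of thousands: tiny input changes can move the solution a lot
```

The second matrix has nearly parallel rows, which is the row-picture view of an ill-conditioned system: two lines that meet at a very shallow angle.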

Software Tools

Many software packages and libraries are available for solving systems of linear equations and performing other linear algebra operations. These include MATLAB, NumPy for Python, and LAPACK for lower-level implementations.

Conclusion

Solving systems of linear equations is the cornerstone of linear algebra. By understanding the row picture, column picture, and matrix form, we gain multiple perspectives on this fundamental problem. These concepts not only provide a foundation for more advanced topics in linear algebra but also serve as essential tools in numerous scientific and engineering applications.

As we've seen, the ability to solve systems of linear equations opens doors to a wide range of applications and more advanced mathematical concepts. Whether you're working in computer graphics, data science, engineering, or pure mathematics, a solid grasp of these fundamental ideas will serve you well.

As you continue your journey in linear algebra, remember that practice is key. Work through many examples, explore different types of systems, and don't hesitate to visualize problems when possible. With time and experience, you'll develop an intuition for these concepts that will prove invaluable in your mathematical and scientific endeavors.

Article created from: https://youtu.be/ZK3O402wf1c?feature=shared