Understanding Generative Adversarial Networks (GANs): From Theory to Implementation

An in-depth exploration of Generative Adversarial Networks (GANs), covering the mathematical foundations, architecture, and practical implementation details.

An in-depth exploration of Generative Adversarial Networks (GANs), covering the mathematical foundations, optimization techniques, and practical implementation considerations.

An in-depth exploration of f-divergences, their properties, and how they are used in generative adversarial networks (GANs) for estimating and sampling from unknown probability distributions.

An in-depth exploration of the Transformer architecture that powers large language models, covering key concepts like attention mechanisms, tokenization, and parallelization.

An in-depth exploration of how transformer models work, focusing on the attention mechanism and its role in processing text and other data types.

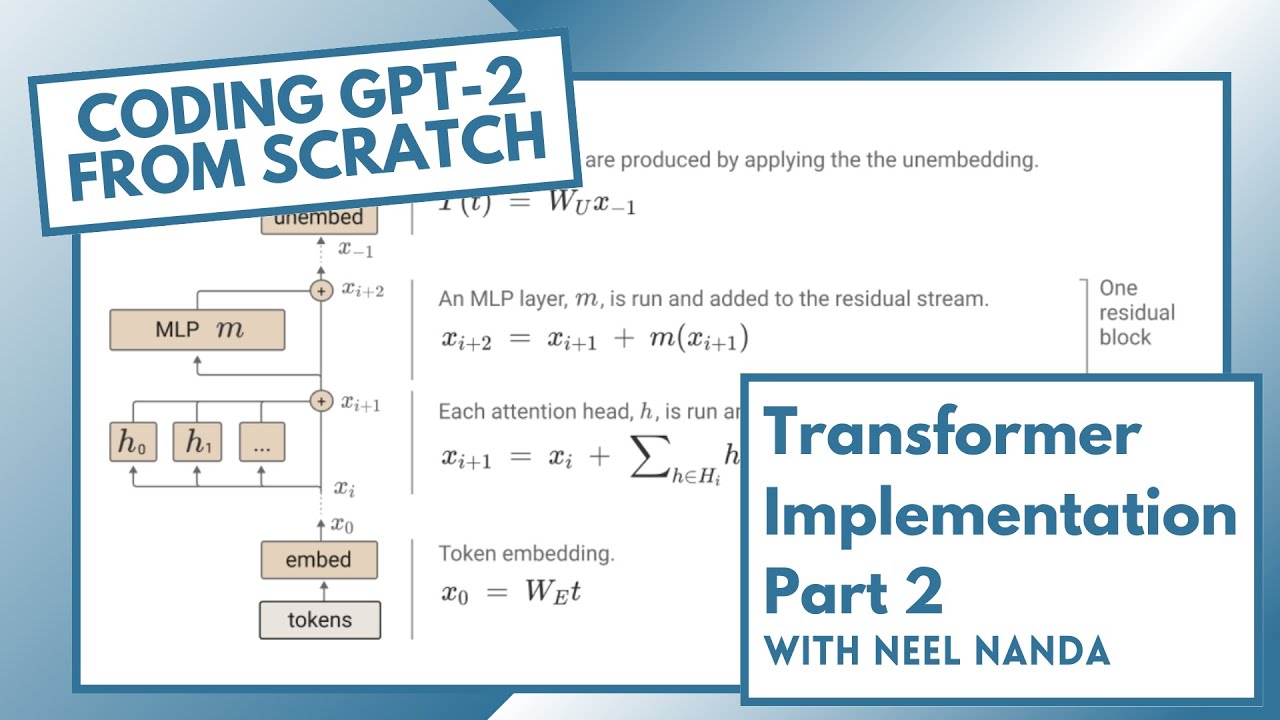

A detailed walkthrough of coding GPT-2 from scratch, covering all components of the Transformer architecture and how to train a small language model.

Explore the inner workings of Transformer models, the architecture behind modern language models like GPT-3. Learn about their structure, components, and how they process and generate text.

Explore the role of positional encoding in Transformers, focusing on RoPE and methods for extending context length. Learn how these techniques impact model performance and generalization.

Explore the evolution of machine learning optimization techniques, from basic gradient descent to advanced algorithms like AdamW. Learn how these methods improve model performance and generalization.