Create articles from any YouTube video or use our API to get YouTube transcriptions

Start for freeUnderstanding Tokenization in Language Models: A Deep Dive

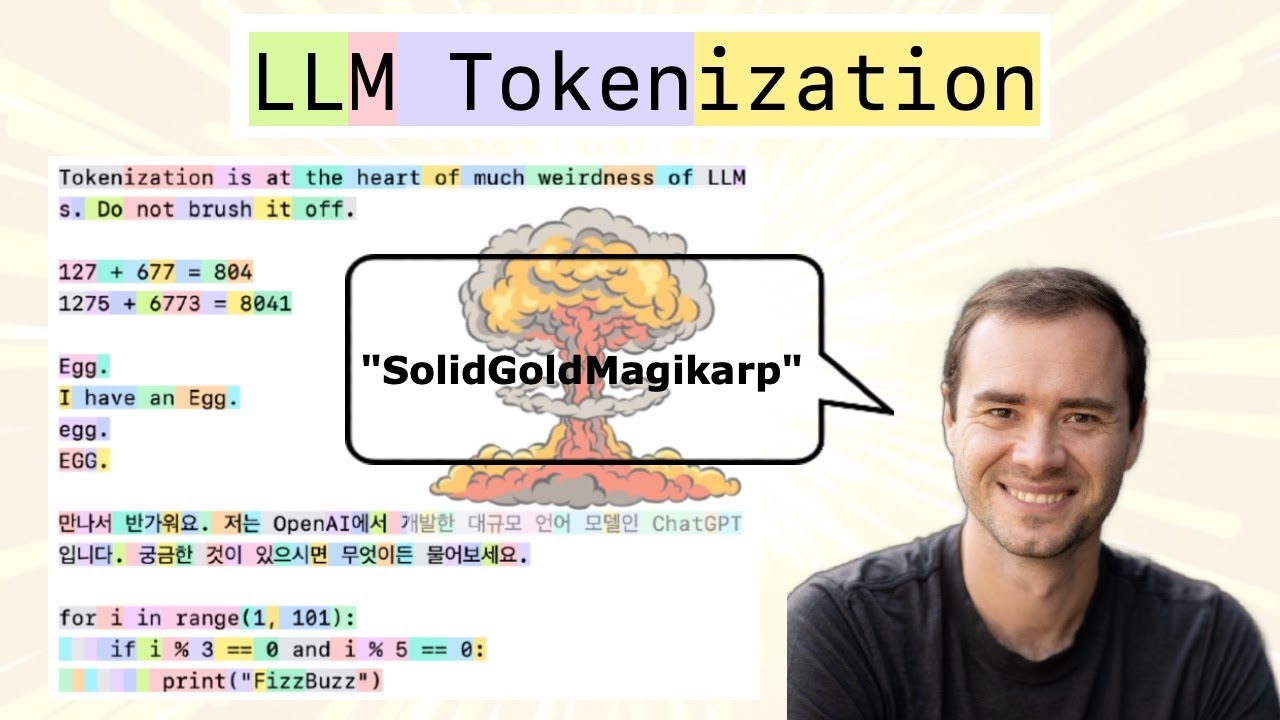

Tokenization is a foundational yet often complex aspect of working with large language models (LLMs). It plays a pivotal role in how these models process and understand text, impacting their performance across a variety of tasks. This article will explore the intricacies of tokenization, its challenges, and its significant influence on language model behavior.

What is Tokenization?

Tokenization is the process of converting strings of text into sequences of tokens, which are essentially standardized units of text. These tokens can take various forms, ranging from individual characters to more complex chunks of text. The method of tokenization directly affects how a language model perceives and processes the input text.

The Process of Tokenization

In its simplest form, tokenization involves creating a vocabulary of possible tokens and encoding text based on this vocabulary. For instance, a naive approach to tokenization could involve creating a vocabulary consisting of individual characters found in a dataset. However, state-of-the-art language models use more sophisticated methods, such as Byte Pair Encoding (BPE), to construct token vocabularies based on larger chunks of text.

Byte Pair Encoding (BPE)

BPE is a popular algorithm for tokenization in LLMs. It works by iteratively merging the most frequent pairs of characters or character sequences in a dataset until a predefined vocabulary size is reached. This method allows for efficient encoding of common words or phrases as single tokens, while also providing a mechanism for encoding rare words or phrases.

The Impact of Tokenization on LLM Behavior

Tokenization profoundly affects the behavior of language models in several ways:

-

Performance on Specific Tasks: The granularity of tokens influences how well an LLM can perform certain tasks. For example, character-level tokenization may hinder a model's ability to understand the context or meaning of words, impacting its performance on tasks requiring a deeper understanding of text.

-

Handling of Non-English Languages: The tokenization process can also affect how well LLMs handle non-English languages. Models trained with a tokenization scheme biased towards English may struggle with languages that use different scripts or have different linguistic structures.

-

Efficiency and Model Size: The choice of tokenization method affects the size of the model's vocabulary, which in turn influences the model's size and computational efficiency. A larger vocabulary requires more memory and computational resources, potentially making the model slower and more expensive to train and run.

Challenges and Considerations

While tokenization is a critical component of LLMs, it comes with its own set of challenges and considerations:

-

Complexity and Overhead: Designing an effective tokenization scheme can be complex and requires careful consideration of the trade-offs between granularity, model size, and performance.

-

Data Distribution and Bias: The tokenization process can introduce bias based on the distribution of data used for training. This can lead to models that perform well on certain types of text but poorly on others.

-

Special Tokens: The use of special tokens for encoding specific information or controlling model behavior adds another layer of complexity to tokenization. Managing these tokens and ensuring they are used appropriately requires careful design and implementation.

Conclusion

Tokenization is a fundamental yet intricate part of working with large language models. Its design and implementation significantly influence model performance, efficiency, and behavior. Understanding the nuances of tokenization is essential for anyone looking to develop or work with LLMs, as it lays the groundwork for how these models interpret and process the vast world of text.

For more detailed insights into tokenization and its impact on language models, visit the original video discussion here.